Part Two: Approaching the problem without rewriting existing code

Welcome back to the second part of SILK for CFCR. If you just stumbled upon this, it might be a good idea to check out the first blog post, which explains the project.

For you TL;DR kind of people:

Here is the CI, here are the wrappers, and finally here is the customized cfcr-release.

As with every new codebase you touch: In the beginning, there was confusion. We quickly realized that we were not going to get away with changing just a few lines. Instead, we would have to touch code in several places and projects to achieve our goal.

Baby steps…

A few things were clear and a good place to start:

- a working Cloud Foundry

- a working CFCR.

Once again we combined Bosh-BBL and BUCC to quickly deploy our infrastructure and base environments. We created two pipelines, one for CF and one for CFCR, and let Concourse take care of the rest.

Hacking it into obedience, it’s just a CNI they said…

This is where it gets complicated and where we hit the first bumps in the road. We knew we were working on exchanging a CNI named Flannel in our CFCR deployment with a CNI named SILK that is used on DIEGO-Cells. To be honest, that was about all we knew…

To get an idea, we started searching for the keywords network, cni and flannel in the releases and deployment manifests we already used: cf-deployment, cf-networking-release, docker-release and kubo-release. This narrowed our scope, but it still left plenty to read.

As a first milestone, we had to find out how and where Flannel is utilized within CFCR and how a CNI is configured in Kubernetes and Docker. We focused our research in two directions: what would Flannel do, and where is a CNI mentioned at all?

What would Flannel do…

We looked at the Flannel-specific code in the CFCR repo and at the Flannel docs. By starting from an existing solution, we could easily identify the key points we had to figure out, and it gave us a roadmap to follow.

First, we found that Flannel writes a config file from within its job's ctl script. The format of this config is defined by the CNI Specification. It is written into a Kubernetes default path for CNI configs, so our vanilla CFCR picked it up without any extra config. A CNI config contains the field ‘type’, and the value of that field maps to the name of a (CNI) binary. This way Kubernetes (and every other CNI consumer) knows what to call. Next, we found that the BOSH Docker-Release has Flannel-specific settings, which we deactivated. This basic info on how Flannel hooks into Kubernetes/Docker was enough to start digging into how SILK hooks into DIEGO/Garden.

So much for our initial research.
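To make the ‘type’ lookup concrete, here is a minimal sketch of how a CNI consumer could resolve the binary from a config. The config content and the function name `plugin_binary` are made up for illustration; `/opt/cni/bin` is used only as a commonly seen plugin directory, not as the path our deployment used.

```python
import json
from pathlib import PurePosixPath

# Illustrative CNI network config. The name and version are placeholders;
# only the "type" -> binary-name convention is taken from the CNI spec.
EXAMPLE_CONF = """
{
  "cniVersion": "0.3.1",
  "name": "example-net",
  "type": "flannel"
}
"""


def plugin_binary(conf_text, bin_dir):
    """Return the path of the plugin binary a CNI consumer would invoke."""
    conf = json.loads(conf_text)
    # The value of "type" is the file name of the executable to look up
    # in the plugin directory.
    return str(PurePosixPath(bin_dir) / conf["type"])


print(plugin_binary(EXAMPLE_CONF, "/opt/cni/bin"))
```

Swapping the CNI therefore comes down to two things: a config whose ‘type’ names the new plugin, and a binary of that name in the directory the consumer searches.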

A CNI for Kubernetes…

Our next goal was to be able to reconfigure CFCR with a different CNI config. We updated our kubo-release to include the required (CNI-related) config options that we found in the Kubernetes documentation. To be able to configure SILK we needed to add parsing for a few Kubelet flags:

- cni-bin-dir, cni-conf-dir (to be able to provide a different CNI and its config)

- proxy-mode and hairpin-mode (to be able to adjust kubelet and kube-proxy config for SILK).
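As a rough sketch of how these options end up on the command line, the snippet below assembles the flags as they existed in Kubelet and kube-proxy versions of that era. The helper names, the directory paths and the chosen mode values are placeholders for illustration and are not the values from our release.

```python
def kubelet_cni_flags(bin_dir, conf_dir, hairpin_mode):
    """Build the Kubelet flags that select a CNI plugin and its config."""
    return [
        "--network-plugin=cni",
        f"--cni-bin-dir={bin_dir}",
        f"--cni-conf-dir={conf_dir}",
        f"--hairpin-mode={hairpin_mode}",
    ]


def kube_proxy_flags(proxy_mode):
    """Build the kube-proxy flag that selects the proxy implementation."""
    return [f"--proxy-mode={proxy_mode}"]


# Hypothetical paths and values, chosen only to show the shape of the result.
flags = kubelet_cni_flags(
    bin_dir="/var/vcap/packages/example-cni/bin",
    conf_dir="/var/vcap/jobs/example-cni/config/cni",
    hairpin_mode="none",
)
print(" ".join(flags + kube_proxy_flags("iptables")))
```

In a BOSH release these values would typically be exposed as job properties and rendered into the start script, which is why the release needed to parse them first.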

Initially, we thought that we would also require a custom Docker release. Eventually, we realized that changing a few networking-related settings in the Docker Release was all it took:

- Deactivate Flannel property

- Deactivate Docker Iptables and IP-Masquerade

We started creating our local/custom releases, which we referenced in the CI:

- Kubo (CI)

- SILK-Patches (CI)

Using SILK on our CFCR cluster required a few more jobs and their packages from cloudfoundry/silk-release.

We did not care much for a working config in the beginning; we had no idea what it could look like anyway. At this step, we added automation so that we could quickly clean up and repave a playground once we had tried one change too many.

Just give me an IP Address already

At this point the basics were in place and we concentrated on “just getting an IP” for our Pods. We kept it simple and just reused the SILK CNI config we found on the DIEGO-Cells and pointed Kubelet to our SILK binaries and config. To no one’s surprise, it broke. But it left fresh interfaces and the corresponding network namespaces on our Kube-Worker. This outcome was a lot more than we expected.

Specifically, it broke because Kubelet had trouble parsing the IP out of the created interfaces. A good thing about stuff that breaks is that it rarely does so silently. We looked at our logs and searched for the code that could produce these messages. We found that Kubelet looks up the IP address of a Pod’s interface by shelling out to the “ip” and “nsenter” binaries. We compared the interface within a runC/SILK container with the one in a Docker/Flannel container and found that the main difference is the scope. SILK creates an interface with scope “link”, while the interface created by Flannel has scope “global”.
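The effect of the scope difference can be sketched in a few lines. The snippet below parses one-line output in the style of `ip -o -4 addr show` and applies a filter on scope “global”. The sample lines and addresses are invented, and the assumption that the lookup filters on the global scope is ours for the purpose of illustration, not a quote from the Kubelet source.

```python
import ipaddress

# Invented sample lines in the style of "ip -o -4 addr show dev eth0".
FLANNEL_LIKE = (
    "3: eth0    inet 10.200.1.5/24 scope global eth0\\"
    "       valid_lft forever preferred_lft forever"
)
SILK_LIKE = (
    "2: eth0    inet 10.255.30.4/32 scope link eth0\\"
    "       valid_lft forever preferred_lft forever"
)


def parse_addr(line):
    """Return (address, scope) from a single one-line address record."""
    fields = line.split()
    # In this layout the fourth field is the address in CIDR notation.
    address = ipaddress.ip_interface(fields[3]).ip
    scope = fields[fields.index("scope") + 1]
    return str(address), scope


def global_addrs(lines):
    """Keep only addresses with scope "global", like a scope filter would."""
    result = []
    for line in lines:
        address, scope = parse_addr(line)
        if scope == "global":
            result.append(address)
    return result


print(global_addrs([FLANNEL_LIKE]))  # the Flannel-style address survives
print(global_addrs([SILK_LIKE]))     # the link-scoped address is filtered out
```

With such a filter in place, a caller sees an empty result for the SILK-style interface even though the interface exists and carries an address.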

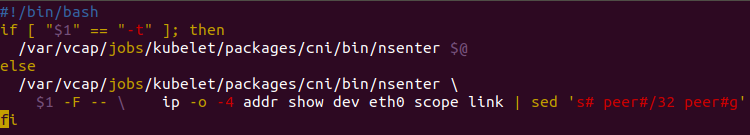

We created a shell wrapper for nsenter on our Kube-Worker and used it to analyze how Kubelet was calling the nsenter/ip commands and which parameters it provided. This way we could iterate fast without redeploying or compiling. Further, we manually reproduced the output of these calls against a Flannel interface (to learn about the expected output). With a bit more understanding of what is supposed to happen, we created a wrapper for “nsenter”.
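The wrapper we actually used is not reproduced here. As a minimal sketch of the logging idea only, written in Python instead of shell, a pass-through wrapper could look like the following. The paths `REAL_NSENTER` and `LOG_FILE` and the function names are hypothetical.

```python
import os
import shlex
import time

# Hypothetical locations; neither path comes from our deployment.
REAL_NSENTER = "/usr/bin/nsenter.real"
LOG_FILE = "/tmp/nsenter-wrapper.log"


def format_call(argv, now=None):
    """Render one log line: a UTC timestamp plus the shell-quoted call."""
    stamp = time.strftime("%Y-%m-%dT%H:%M:%S", time.gmtime(now))
    return f"{stamp} {shlex.join(argv)}"


def run(argv):
    """Log the call, then hand over to the real binary unchanged."""
    with open(LOG_FILE, "a") as log:
        log.write(format_call(argv) + "\n")
    # Replace this process with the real nsenter, keeping all arguments.
    os.execv(REAL_NSENTER, [REAL_NSENTER] + argv[1:])
```

Installed in place of the original binary and invoked with `run(sys.argv)`, such a wrapper records every call the caller makes without changing its behavior, which is enough to learn the exact parameters before deciding what to alter.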

We included the wrapper in our release and the CI redeployed. After a few minutes of waiting, we started seeing the first Pods come up with IPs from SILK’s CIDR. From a functional point of view, we had created interfaces on the Kube-Worker, but we were far from implementing the Kubernetes or Docker networking model.

Thank you for reading and stay tuned for the upcoming post:

Part One: Building bridges in the cloud

Part Two: Approaching the problem without rewriting existing code

Part Three: There is no better way to learn than trial and error