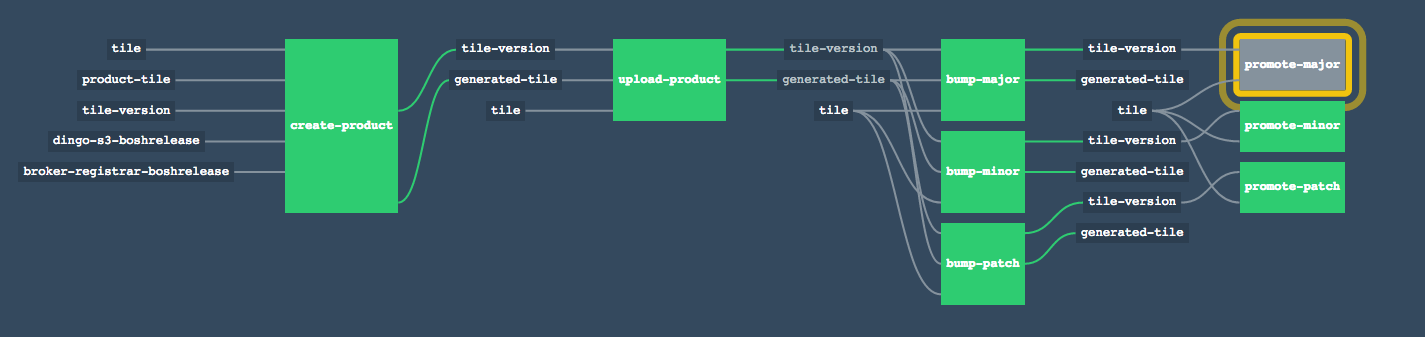

In the development and release of Dingo S3 we developed a Concourse CI pipeline for building, uploading and installing Pivotal tiles. In this blog post I wanted to share some of the tools and APIs that we used. This is useful for all PCF/Ops Manager admins who can create their own pipelines or CI jobs to automate the installation/upgrade of Pivotal tiles.

If you’d like us to join your team for a few months and help you script and automate your own Pivotal Cloud Foundry foundations then please contact us today.

Building new tiles

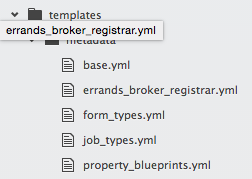

The Pivotal Partner documentation describes the make up of a Pivotal tile. They are .zip files re-suffixed as .pivotal. They contain the BOSH releases being used, a metadata YAML file, and a description of how the tile can migrate/upgrade from previous versions. Both the product template reference documentation and the example tile project are excellent sources of information and examples.

Each time through our pipeline we must create the large metadata YAML file. We use spruce to merge together smaller, easier to read, YAML files. Some originate from source control, others are generated by the CI job during the pipeline – e.g. the product_version will include a build number, and releases includes the specific BOSH release versions being bundled.

Up to Pivotal Ops Manager v1.6 (most recent at time of writing) there is a complex content_migration YAML file that describes how this new tile version can upgrade from a very specific set of previous versions. If you get this wrong then Ops Manager will complain that your existing running tile cannot be upgraded to the new tile. So you need to ensure that you keep track of all versions that have ever been published/distributed; and the version(s) that are currently deployed internally by your pipeline.

Our current solution is to use aws CLI to look into the AWS S3 buckets that store the generated dingo-s3-X.Y.Z-rc.A.pivotal and dingo-s3-X.Y.Z.pivotal tiles to collect the full list of all previous versions. We can then generate a content_migration file that explicitly supports an upgrade migration from all known past versions to the new version.

In Pivotal Ops Manager v1.7 (not yet released at time of writing) it looks like this content_migration concept goes away and is replaced by optional JavaScript-based migration functions. Optional. Hurray! My assessment is based on the master branch of the example tile repository. Afaik there has not been an announcement on this change; but watching the evolution of this example repo is a good indicator about the future of Pivotal Ops Manager.

Our pipeline lays out the BOSH releases, metadata and content_migration files into the correct folder structure, zips them up, re-suffixes it to .pivotal and uploads it to our private Amazon S3 bucket.

Automating with the Ops Manager API

There are two ways to automate Pivotal Cloud Foundry, or specifically, Pivotal Ops Manager:

- the Ops Manager API

- the Ops Manager web UI

Each Ops Manager includes an API, and the documentation for that API. Navigate to the /docs path of your Ops Manager and it will show you all the API endpoints and how to invoke them. The API itself starts at the /api path.

The API the uses basic auth opsmgr_username and opsmgr_password that you use to login to Ops Manager login page.

Some examples, to get a listing of all product tiles that are uploaded:

if [[ "${opsmgr_url}X" == "X" ]]; then

echo "upload-product.sh requires \$opsmgr_url, \$opsmgr_username, \$opsmgr_password"

exit

fi

if [[ "${opsmgr_skip_ssl_verification}X" != "X" ]]; then

skip_ssl="-k "

fi

curl -f ${skip_ssl} -u ${opsmgr_username}:${opsmgr_password} \

"${opsmgr_url}/api/products"

One handy API function that is not available via the web UI is to delete all unused product tiles. This allows you to re-upload a tile that might have the same version number as an unused tile that’s already uploaded.

curl -f ${skip_ssl} -u ${opsmgr_username}:${opsmgr_password} \

"${opsmgr_url}/api/products" -X DELETE

To upload a .pivotal tile:

curl -f ${skip_ssl} -u ${opsmgr_username}:${opsmgr_password} \

"${opsmgr_url}/api/products" -X POST -F "product[file][email protected]${tile_path}"

There are API endpoints for:

- reviewing what tiles are currently installed (to determine if you are doing an initial installation

/api/installation_settings/products -X POSTor upgrading an installed tile/api/installation_settings/products/${uuid} -X PUT) - triggering the "Apply Changes" installation (

/api/installation?ignore_warnings=1" -d '' -X POST) - get the progressive logs of an installation (

/api/installation/${installation_id}/logs)

If you are writing elaborate bash scripts to do this automation then the jq tool is your best friend.

Automating with the Ops Manager Web UI

Another method we use to automate Ops Manager is to programmatically interact with the web UI – the one that was designed for humans.

The https://github.com/pivotal-cf-experimental/ops_manager_ui_drivers project uses the same concepts and tools as a Ruby on Rails integration test suite to do end-to-end testing of a web applcation – drive the UI interactions inside an invisible/headless browser (such as phantomjs), clicking and selecting and filling in text fields, and then making assertions about what should be expected on the screen.

The great thing about the ops_manager_ui_drivers project is that it supports many older versions of Ops Manager, even though the Web UI has evolved over time.

We’ve used the phantomjs headless browser in our pipelines because it is the easiest to get installed into the Docker images that we use for our Concourse CI tasks.

There have only been one or two reasons that we’ve needed to use the capybara project rather than the API, so I’ll only mention it here as an option.

On RubyGems there appears to be a project opsmgr that depends on ops_manager_ui_drivers, but there is not a public repository for it. But download the gem, open up the RubyGem source and you’ll find your way about.