This is the fourth part of a multi-part series on designing and building non-trivial containerized solutions. We’re making a radio station using off-the-shelf components and some home-spun software, all on top of Docker, Docker Compose, and eventually, Kubernetes.

In this part, we’ve got a working system, our own rebuildable images, and a portable Docker Compose deployment recipe. What more could we want? A web interface for managing the tracks that we’re streaming would be nice…

The Icecast web interface is, well, usable, but it does leave a fair amount to be desired. This is the part of systems design where I usually take a step back, see what’s missing, and then focus my software development energies on solving those deficiencies.

As a radio station operator, I would like to…:

- Exert fine-grained control over the tracks that my radio station plays.

- Use my web browser to add new YouTube tracks to my radio station.

That’s it; I’m a simple man, with simple needs. I can write a small-ish web app to do these things, and give it read-write access to the /radio volume.

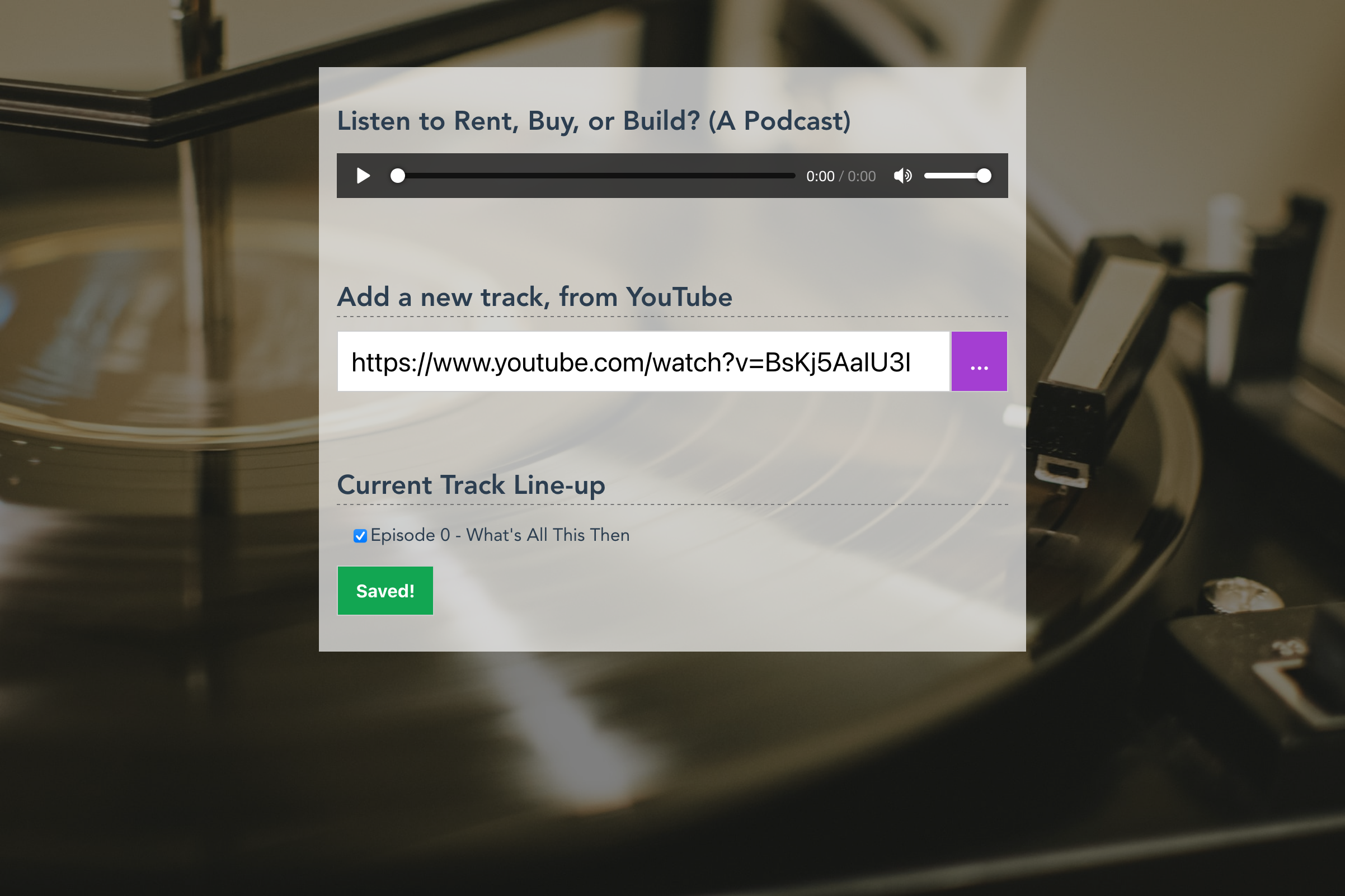

And here it is:

I call it MixBooth. It’s on GitHub too.

MixBooth is a small Vue.js front-end backed by an even smaller Go HTTP (REST) API. The backend piece consists of only three endpoints:

`GET /playlist`

This one retrieves the current playlist. The Vue bits render this on the bottom half, under the Current Track Line-Up heading. The checkboxes are there so that you, intrepid Radio Disk Jockey that you are, can remove tracks from the rotation, and add them back in, by way of our next endpoint:

`PUT /playlist`

Tired of the same song popping up? Yank the cassette!

Finally, to get new stuff into the station, from YouTube, we have:

`POST /upload`

For a deeper dive into the code, check out the GitHub repository.
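To make the two playlist endpoints a bit more concrete, here is a minimal Go sketch of how `GET` and `PUT /playlist` could be served. It is not MixBooth’s actual code. The `Track` JSON shape and the function names are assumptions made for illustration. So is the central convention: a pulled track stays in `playlist.m3u` as a `#/path` line. M3U treats lines starting with `#` as comments, which should make the stream source skip them. The sketch needs Go 1.16 or newer for `os.ReadFile` and `os.WriteFile`.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"os"
	"path/filepath"
	"strings"
)

// Track is a hypothetical JSON shape for one entry in the line-up.
type Track struct {
	Path    string `json:"path"`
	Enabled bool   `json:"enabled"`
}

// ParsePlaylist turns M3U text into tracks. Plain lines are tracks in
// rotation; "#/path" lines are tracks that were pulled (an assumed
// convention). Other comments, such as #EXTM3U, and blank lines are skipped.
func ParsePlaylist(text string) []Track {
	var tracks []Track
	for _, line := range strings.Split(text, "\n") {
		line = strings.TrimSpace(line)
		switch {
		case line == "":
			// blank line: skip
		case strings.HasPrefix(line, "#/"):
			tracks = append(tracks, Track{Path: line[1:], Enabled: false})
		case strings.HasPrefix(line, "#"):
			// some other M3U comment or directive: skip
		default:
			tracks = append(tracks, Track{Path: line, Enabled: true})
		}
	}
	return tracks
}

// RenderPlaylist is the inverse of ParsePlaylist: pulled tracks are written
// back as "#/path" comments so they can be put back into rotation later.
func RenderPlaylist(tracks []Track) string {
	var b strings.Builder
	for _, t := range tracks {
		if !t.Enabled {
			b.WriteString("#")
		}
		b.WriteString(t.Path + "\n")
	}
	return b.String()
}

// playlistHandler answers GET (read the line-up) and PUT (replace it) for the
// playlist file at path. PUT writes a temporary file in the same directory and
// renames it over the original, so a reader never sees a half-written file.
func playlistHandler(path string) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		switch r.Method {
		case http.MethodGet:
			data, err := os.ReadFile(path)
			if err != nil {
				http.Error(w, err.Error(), http.StatusInternalServerError)
				return
			}
			w.Header().Set("Content-Type", "application/json")
			json.NewEncoder(w).Encode(ParsePlaylist(string(data)))
		case http.MethodPut:
			var tracks []Track
			if err := json.NewDecoder(r.Body).Decode(&tracks); err != nil {
				http.Error(w, err.Error(), http.StatusBadRequest)
				return
			}
			tmp := filepath.Join(filepath.Dir(path), ".playlist.m3u.tmp")
			err := os.WriteFile(tmp, []byte(RenderPlaylist(tracks)), 0o644)
			if err == nil {
				err = os.Rename(tmp, path)
			}
			if err != nil {
				http.Error(w, err.Error(), http.StatusInternalServerError)
				return
			}
			w.WriteHeader(http.StatusNoContent)
		default:
			http.Error(w, "method not allowed", http.StatusMethodNotAllowed)
		}
	}
}

func main() {
	// In a server: http.Handle("/playlist", playlistHandler("/radio/playlist.m3u"))
	tracks := ParsePlaylist("#EXTM3U\n/radio/a.opus\n#/radio/b.opus\n")
	fmt.Println(tracks)
	fmt.Print(RenderPlaylist(tracks))
}
```

The write-then-rename step matters because LiquidSoap re-reads the playlist on its own schedule (`reload=120` in the Compose file). A rename within one directory swaps in the whole new file at once. A round trip also drops other comment lines such as `#EXTM3U`. The plain list of paths that the ingest script writes does not use those anyway.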

To containerize this thing, we’re first going to look at our Dockerfile. Keep in mind I specifically wrote this piece of software to work with this radio station deployment. I was “filling in the gaps” so to speak. This is a long Dockerfile – the longest we’ve seen so far – so we’ll take it in parts.

Our first part is the api stage. Here, we build the Go backend application, using tooling that should be familiar to most Go programmers (even terrible ones like me):

```dockerfile
FROM golang:1.15 AS api
WORKDIR /app
COPY go.mod .
COPY go.sum .
COPY main.go .
RUN go build
```

Our next stage (in the same Dockerfile) builds the Vue component, using tooling that is recognizable to Node developers (but maybe not to the aforementioned Go rockstars):

```dockerfile
FROM node:15 AS ux
WORKDIR /app
COPY ux .
RUN yarn install
RUN yarn build
```

Finally, in the last stage, we’ll tie it all back together, copying in assets from our build stages, with some Ubuntu packaging:

```dockerfile
FROM ubuntu:20.04
RUN apt-get update \
 && DEBIAN_FRONTEND=noninteractive apt-get install -y python3 python3-pip ffmpeg \
 && pip3 install youtube-dl \
 && apt-get remove -y python3-pip \
 && apt-get autoremove -y \
 && rm -rf /var/lib/apt/lists/*

COPY --from=api /app/mixbooth /usr/bin/mixbooth
COPY --from=ux /app/dist /htdocs
COPY ingest /usr/bin

EXPOSE 5000
ENV HTDOCS_ROOT=/htdocs
CMD ["mixbooth"]
```

Remember: we need Python (and pip!) for the youtube-dl bits; this web UI literally shells out to run youtube-dl when you ask to ingest new tracks. pip itself is only needed long enough to install youtube-dl, which is why the same RUN step removes it again.

In fact, let’s take a closer look at that ingest script we’re copying in.

```bash
#!/bin/bash
# Download each URL's audio with youtube-dl, transcode it to Opus, and add it
# to the station's playlist. Expects $RADIO_ROOT to point at the shared volume.
set -eu

workdir=/tmp/ytdl.$$
mkdir -p "$workdir"
pushd "$workdir"

for url in "$@"; do
  youtube-dl -x "$url"
  for file in *; do
    ffmpeg -i "$file" -vn -c:a libopus "$file.opus"
    mv "$file.opus" "$RADIO_ROOT/"
    echo "$RADIO_ROOT/$file.opus" >> "$RADIO_ROOT/playlist.m3u"
    # remove the source so the next URL's pass doesn't convert it again
    rm -f "$file"
  done
done

popd
rm -rf "$workdir"
```

I want to point out that instead of hard-coding the radio files mountpoint to something like /radio, I chose to rely on the $RADIO_ROOT environment variable. We’ll use this in our next section, when we add the web interface container into our larger deployment.

## Composing the Web UI

Let’s get this web interface into the mix from a Docker perspective, shall we?

Here’s the Compose file we ended up with from the last post:

```yaml
---
version: '3'
services:
  icecast:
    image: filefrog/icecast2:latest
    ports:
      - '8000:8000'
    environment:
      ICECAST2_PASSWORD: whatever-you-want-it-to-be

  source:
    image: filefrog/liquidsoap:latest
    command:
      - |
        output.icecast(%opus,
          host = "icecast",
          port = 8000,
          password = "whatever-you-want-it-to-be",
          mount = "pirate-radio.opus",
          playlist.safe(reload=120,"/radio/playlist.m3u"))
    volumes:
      - $PWD/radio:/radio
```

Let’s add a new web service, using the published Docker image:

```yaml
---
version: '3'
services:
  # in addition to the other services, here's a new one:
  web:
    image: filefrog/mixbooth:latest
    environment:
      RADIO_ROOT: /radio
      MIXBOOTH_STREAM: '//{host}:8000/pirate-radio.opus'
    ports:
      - 5000:5000
    volumes:
      - $PWD/radio:/radio
```

This will spin up the (public) MixBooth image, and bind it on port 5000. We point to the same host path ($PWD/radio) as we used for the LiquidSoap container: we need them to both be looking at the exact same files, so that we can add new tracks for the stream source to pick up, and modify the playlist it uses.

We also added the $RADIO_ROOT environment variable, since MixBooth has no preconceived notions of where the audio files ought to go. Then there’s the funny-looking $MIXBOOTH_STREAM environment variable, which we set like so:

```yaml
MIXBOOTH_STREAM: '//{host}:8000/pirate-radio.opus'
```

This is highly specific to what MixBooth does. The embedded player for the radio station is little more than an HTML5 `<audio>` element with the appropriate stream source elements. The heavy lifting is done by the browser, thankfully! However, much as MixBooth was clueless about where the audio tracks should live, it is likewise flummoxed by where, precisely, one would go to listen to those tracks.

This $MIXBOOTH_STREAM environment variable encodes that information, but it does so with some late-binding templating. More precisely, the {host} bit will be replaced, by the JavaScript in the visitor’s browser, with whatever hostname they used to access the web interface itself. And because the value starts with // rather than http:// or https://, it is a protocol-relative URL, so the browser also reuses whichever scheme the page was loaded over. Come in by IP? Hit the Icecast endpoint by IP. Used a domain name and TLS? Listen in secure comfort, oblivious to the numbers that underpin the very Internet.
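MixBooth performs this substitution client-side, in JavaScript. Purely to illustrate the logic, here is the same idea in Go, as a server-side variant that works from the request’s Host header. `streamURL` is a hypothetical name. The IPv6 bracket handling is an extra detail the original does not mention.

```go
package main

import (
	"fmt"
	"net"
	"strings"
)

// streamURL fills the {host} placeholder of a MIXBOOTH_STREAM-style template
// with the host the visitor used. hostHeader is a Host header value such as
// "10.0.0.5:5000"; its port is dropped because the template names Icecast's.
func streamURL(template, hostHeader string) string {
	host := hostHeader
	if h, _, err := net.SplitHostPort(hostHeader); err == nil {
		host = h
		if strings.Contains(host, ":") {
			host = "[" + host + "]" // IPv6 literals need brackets in a URL
		}
	}
	return strings.ReplaceAll(template, "{host}", host)
}

func main() {
	tpl := "//{host}:8000/pirate-radio.opus"
	fmt.Println(streamURL(tpl, "10.0.0.5:5000"))     // //10.0.0.5:8000/pirate-radio.opus
	fmt.Println(streamURL(tpl, "radio.example.com")) // //radio.example.com:8000/pirate-radio.opus
}
```

Leaving the substitution to the browser, as MixBooth does, means the server never has to know how it was reached.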

These were both conscious design decisions made (by me) while implementing this missing piece of the puzzle. By abstracting the site- and station-specific configuration out of the code, and even out of the “configuration” (such as it is), I was able to make the deployment more cohesive and explicit. Since you can’t not spell out precisely where the wiring goes, the resulting docker-compose.yml is much easier to understand.

(If you’re into software engineering self-reflection, this forthrightness is aimed squarely at reducing Action At A Distance.)

Here’s the final Compose file; take it for a spin and see what you think!

```yaml
---
version: '3'
services:
  icecast:
    image: filefrog/icecast2:latest
    ports:
      - '8000:8000'
    environment:
      ICECAST2_PASSWORD: whatever-you-want-it-to-be

  source:
    image: filefrog/liquidsoap:latest
    command:
      - |
        output.icecast(%opus,
          host = "icecast",
          port = 8000,
          password = "whatever-you-want-it-to-be",
          mount = "pirate-radio.opus",
          playlist.safe(reload=120,"/radio/playlist.m3u"))
    volumes:
      - $PWD/radio:/radio

  web:
    image: filefrog/mixbooth:latest
    environment:
      RADIO_ROOT: /radio
      MIXBOOTH_STREAM: '//{host}:8000/pirate-radio.opus'
    ports:
      - 5000:5000
    volumes:
      - $PWD/radio:/radio
```

Next time, we’ll pick this whole deployment up and dump it onto the nearest convenient Kubernetes cluster. Stay tuned!